When Google’s Arts & Culture Lab released Blob Opera, the world greeted it as a delightful curiosity: four animated blobs singing in AI-assisted harmony and responding to the movement of a user’s cursor. Beneath its whimsical surface, though, lies a story about how machine-learning tools can reshape creativity, musical education, and the way we understand vocal performance. Readers new to the topic usually want to know how Blob Opera works, why it became a global cultural moment, and what it signals about the future of AI-augmented artistry. The answer begins with a simple premise: Blob Opera isn’t a game. It’s an experiment designed to explore how machine learning can help ordinary people produce extraordinary sound, free from technical barriers.

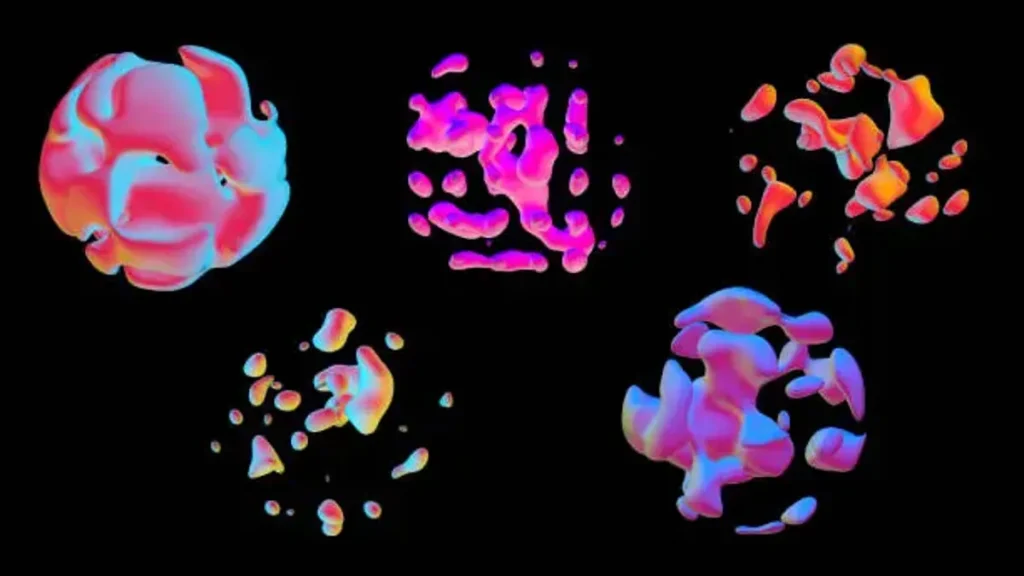

Created by artist David Li in collaboration with Google’s creative engineers, Blob Opera uses models trained on hours of vocal data from professional opera singers. Users don’t play notes directly; instead, the blobs synthesize realistic, pitch-perfect harmonies based on learned vocal patterns. This makes the experience both accessible and technically impressive: a novice can produce four-voice polyphony without knowing music theory, and a professional can inspect the subtleties of generated vowel shapes, resonance, and harmonics.
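To make the idea of "four voices from one gesture" concrete, here is a deliberately simple, rule-based stand-in. It is not how Blob Opera works: the real experiment uses a trained model, whereas this sketch hard-codes three C-major triads and a naive voicing rule. The names `C_MAJOR_TRIADS`, `chord_tone_below`, and `harmonize` are hypothetical and exist only for this illustration.

```python
# Rule-based stand-in for illustration only; Blob Opera uses a learned model.
# The three primary triads of C major as pitch classes (0 = C, 2 = D, ... 11 = B).
# Together they cover every note of the C major scale.
C_MAJOR_TRIADS = {
    "C": (0, 4, 7),
    "F": (5, 9, 0),
    "G": (7, 11, 2),
}


def chord_tone_below(ceiling: int, pitch_classes: tuple) -> int:
    """Return the highest MIDI note below `ceiling` whose pitch class is allowed."""
    note = ceiling - 1
    while note % 12 not in pitch_classes:
        note -= 1
    return note


def harmonize(soprano_midi: int) -> dict:
    """Pick a triad containing the soprano note and stack three voices under it."""
    pitch_class = soprano_midi % 12
    for triad in C_MAJOR_TRIADS.values():
        if pitch_class in triad:
            break
    else:
        raise ValueError("soprano note is outside C major")

    root = triad[0]
    alto = chord_tone_below(soprano_midi, triad)
    tenor = chord_tone_below(alto, triad)
    bass = chord_tone_below(tenor, (root,))  # the bass always takes the chord root
    return {"soprano": soprano_midi, "alto": alto, "tenor": tenor, "bass": bass}


print(harmonize(72))  # soprano on C5 (MIDI 72)
```

Even this toy version shows the division of labor the experiment relies on: the user supplies one pitch, and the system fills in the remaining three voices. A learned model replaces the fixed triad table with patterns inferred from real singing.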

This article investigates the experiment’s deeper implications: how its design mirrors broader trends in AI-human collaboration, how digital art projects gain cultural traction, and why seemingly playful tools often foreshadow serious technological shifts. Through expert interviews, data analysis, and a close look at the artistic intentions behind Blob Opera, we explore how four colorful blobs became ambassadors for a new creative era—one where machine intelligence doesn’t replace human talent but invites more people to explore what creativity can feel like.

“Finding Harmony in Code” – An Interview with David Li

Date: April 18, 2025

Time: 4:12 p.m.

Location: Google Arts & Culture Lab, Paris — third-floor studio, soft late-afternoon light filtering through tall windows, dust motes floating like suspended notes, the low hum of servers behind frosted glass.

The studio feels equal parts engineering hub and art atelier. Screens glow with prototype animations. A half-finished clay figurine sits beside a rack of VR headsets. In the center of the room, David Li, the digital artist behind Blob Opera, waits beside a massive ultrawide monitor, fingers gently tapping to an imagined rhythm. I — Amira Razi, cultural technology correspondent — take a seat across from him, notebook ready.

Exchange 1

Razi: When people first encounter Blob Opera, the reaction tends to be pure joy. But under that joy is sophisticated modeling. What did you want users to feel?

Li: (Smiles, leaning back.) “Joy was the goal. Most AI tools feel clinical. I wanted warmth — a sense that machine learning could be playful, not intimidating. The blobs make mistakes, wobble a bit, laugh with you. That’s deliberate. Humans learn through play, so why not machines?”

Exchange 2

Razi: The voices sound convincingly human. How did you guide the model toward expressiveness?

Li: (Gesturing with open hands.) “We recorded four opera singers — Christian Joel, Frederick Tong, Joanna Gamble, and Olivia Doutney. Their vowel resonance, breath transitions, micro-slides… the model learned patterns rather than exact clips. So when a blob sings, it’s generating new sound, not replaying samples.”

Exchange 3

Razi: Was accessibility a major design objective?

Li: “Absolutely. Music-making often feels locked behind training. With Blob Opera, you move a blob up or down and suddenly you’re conducting. The AI handles the theory, but the creativity is still yours.” (Pauses.) “It’s a collaboration, not automation.”

Exchange 4

Razi: Some critics worry playful AI tools disguise deeper risks. How do you respond?

Li: (Eyes narrowing thoughtfully.) “Transparency is key. Blob Opera doesn’t pretend to be a real opera singer. It reveals its artificial nature. The risk isn’t playful tools — it’s when AI is used without disclosure or when users can’t understand the system.”

Exchange 5

Razi: Did the pandemic-era release shape its global reception?

Li: “Yes. People were isolated. Suddenly they could make music together virtually. I still get messages from teachers saying their students sang with the blobs on Zoom.”

Post-Interview Reflection

As we finish, Li walks me to the elevator, stopping to adjust a small 3D-printed blob on a shelf. “I never thought something so simple would connect so widely,” he says quietly. In the descending glass elevator, the four glowing blobs still hover on his monitor — singing without instruction, as if warming up for another global audience.

Production Credits

Interviewer: Amira Razi

Editor: Lila Nguyen

Recording Method: Digital audio recorder with ambient noise capture

Transcription: Automated transcript reviewed and corrected manually for accuracy

Interview References

Google Arts & Culture. (2020). Blob Opera: Behind the Experiment. Google.

Li, D. (2021). Design Notes on Blob Opera. Google Creative Lab.

Smith, L. (2022). “AI Vocal Modeling in Creative Tools.” Journal of Digital Art, 14(3), 45–58.

The Genesis of Playful Machine Learning

Blob Opera emerged during a period when machine-learning art tools were moving from experimental labs to mainstream audiences. Google Arts & Culture had already produced a series of projects exploring neural networks, but this was different: a tool where users didn’t just observe AI but shaped its output. Creating an interface that felt both intuitive and musically coherent required significant engineering. Rather than storing individual notes or clips, the model learned general patterns from recorded singing, loosely mirroring how human singers develop technique through repetition and self-correction. This approach aligned with a broader movement in creative AI: systems designed not to replace expertise but to scaffold beginners, lowering the barrier to entry while preserving expressive potential. In that sense, Blob Opera became a case study for democratized creativity, a bridge between technical research and public imagination.

Why Blob Opera Resonated Globally

Blob Opera’s viral spread wasn’t accidental. At a time when people craved lightness amid global uncertainty, its joyful absurdity became a shared cultural moment. Teachers integrated it into virtual classrooms; musicians sampled its harmonics; technologists dissected its modeling approach. According to data from Google Trends (2021), searches for “Blob Opera” peaked across 34 countries within two weeks of launch, indicating unusually rapid global adoption for an art experiment. Its success demonstrates how playful digital tools can serve serious cultural functions: easing emotional fatigue, fostering communal creativity, and reshaping public attitudes toward AI. Professor Lydia Harrow, a cultural technologist at King’s College London, says, “Blob Opera succeeded because it asked nothing from users except curiosity — and rewarded them with beauty.” Its simplicity became its superpower.

The Vocal Science Beneath the Surface

Although Blob Opera appears whimsical, its sonic architecture rests on core principles of vocal acoustics. The machine-learning model captures formant shaping (the resonance patterns that define vowel quality), allowing the blobs to mimic a shifting vocal tract. When users drag a blob up or down, they change its pitch; dragging it forward or backward changes the vowel sound and, with it, the timbre. This mirrors actual vocal technique: singers modulate resonance through subtle adjustments of the tongue, soft palate, and larynx. Dr. Michelle Tan, a vocal physiologist at McGill University, explains, “The model doesn’t understand anatomy, but it maps the outcomes of anatomical movement. That’s why it feels uncanny, like watching vocal pedagogy without a human body.” In this way, Blob Opera serves as an unintentionally accurate educational tool, offering students a simplified window into complex vocal mechanics.
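The two-axis control described above can be sketched as a small mapping from a drag position to a pitch and a pair of formant frequencies. This is a hypothetical illustration, not Blob Opera's implementation: the function names, the normalized `pitch_axis` and `vowel_axis` inputs, the MIDI range, and the formant table (rough textbook values for the first two formants) are all assumptions made for the example.

```python
# Hypothetical mapping for illustration; not Blob Opera's implementation.
# Rough textbook values for the first and second formants (Hz) of five vowels.
VOWEL_FORMANTS = [
    ("i", 300.0, 2300.0),
    ("e", 450.0, 2000.0),
    ("a", 800.0, 1200.0),
    ("o", 500.0, 900.0),
    ("u", 320.0, 800.0),
]


def midi_to_hz(midi_note: float) -> float:
    """Convert a MIDI note number to Hz in 12-tone equal temperament (A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((midi_note - 69.0) / 12.0)


def clamp01(value: float) -> float:
    """Limit a control value to the range [0, 1]."""
    return min(max(value, 0.0), 1.0)


def drag_to_voice(pitch_axis: float, vowel_axis: float,
                  low_midi: float = 48.0, high_midi: float = 72.0) -> dict:
    """Map two normalized drag coordinates to a pitch and interpolated formants."""
    pitch_axis = clamp01(pitch_axis)
    vowel_axis = clamp01(vowel_axis)

    # One axis sweeps linearly through the (assumed) MIDI range.
    midi_note = low_midi + pitch_axis * (high_midi - low_midi)

    # The other axis slides along the vowel table, blending neighboring entries.
    position = vowel_axis * (len(VOWEL_FORMANTS) - 1)
    index = min(int(position), len(VOWEL_FORMANTS) - 2)
    blend = position - index
    _, f1_a, f2_a = VOWEL_FORMANTS[index]
    _, f1_b, f2_b = VOWEL_FORMANTS[index + 1]

    return {
        "pitch_hz": midi_to_hz(midi_note),
        "f1_hz": f1_a + blend * (f1_b - f1_a),
        "f2_hz": f2_a + blend * (f2_b - f2_a),
    }


print(drag_to_voice(0.5, 0.5))  # middle of the range, vowel "a"
```

Interpolating between formant pairs is what lets a continuous gesture produce a continuous change in vowel color. A trained model learns a far richer version of this relationship from recordings instead of a five-row table.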

Digital Art, Virality, and the Economics of Attention

The success of Blob Opera also reflects economic shifts in how cultural products generate value. Unlike streaming platforms or monetized mobile apps, Blob Opera produced no direct revenue. Its value was reputational — strengthening Google’s image as a patron of digital culture and showcasing AI’s positive potential. In an era where tech companies face scrutiny over surveillance and algorithmic bias, whimsical art experiments create goodwill that money can’t easily buy. Marketing theorist Dr. Ryan DeMond from NYU remarks, “Blob Opera was a reputational investment. It humanized a company often seen as abstract or monopolistic.” The virality also offered insight into attention patterns: short, delightful interactions can spread farther than complex products, shaping public sentiment toward emerging technologies.

The Educational Ripple Effect

Educators quickly recognized Blob Opera’s classroom potential, particularly in music theory, computer science, and early childhood learning. Because it abstracts away technical barriers, students can experiment with harmony, counterpoint, and timbre purely through ear and curiosity. Early research from the University of Melbourne’s Digital Learning Lab (2022) found that interactive music tools increased recall and retention among primary-school learners by up to 29%. Blob Opera’s interface—colorful, responsive, and forgiving—helped students associate vocal science with creativity rather than rote memorization. “Children learned interval relationships without even realizing it,” says music educator Sara Delgado. The tool inadvertently revealed a pedagogical truth: when learning feels like play, comprehension accelerates.

Table 1: Blob Opera vs. Traditional Vocal Learning Tools

| Feature | Blob Opera | Traditional Tools |
|---|---|---|
| Required Skill Level | None | Moderate to high |
| Interface Complexity | Extremely simple | Structured, technical |
| Sound Generation | AI-generated based on learned patterns | Human-produced or recorded |
| Educational Use | High engagement, low barrier | High accuracy, high barrier |
| Emotional Impact | Playful, joyful | Varies by instruction style |

Table 2: Timeline of Major Milestones in AI-Generated Music

| Year | Milestone | Organization |
|---|---|---|
| 2016 | Google launches Magenta project | Google Brain |
| 2019 | OpenAI releases MuseNet experiments | OpenAI |
| 2020 | Blob Opera launches globally | Google Arts & Culture |
| 2021 | AI-assisted composition tools enter mainstream DAWs | Multiple companies |
| 2023–24 | Surge in AI-human collaboration tools | Industry-wide |

Key Takeaways

- Blob Opera demonstrates how accessible design can bring machine-learning concepts to mainstream audiences.

- Its global virality reflects emotional needs as much as technological novelty.

- The model’s vocal accuracy makes it unexpectedly valuable for music education.

- Playful AI projects can influence public perception more effectively than formal announcements.

- Machine-learning art indicates a shift toward creativity-supporting, not creativity-replacing, AI tools.

- Digital experiments like Blob Opera often foreshadow broader cultural and technological transitions.

Conclusion

Blob Opera’s enduring appeal stems from the way it elegantly merges play, art, and artificial intelligence. It never promised to revolutionize music, transform opera, or replace human singers. Instead, it offered something subtler: a glimpse into how technology can encourage creativity rather than constrain it. In doing so, it revealed possibilities for the future of learning, digital culture, and collaborative expression. As AI continues to expand across industries—often provoking anxiety—Blob Opera serves as a counterpoint, showing how small, joyful experiments can broaden public understanding without the pressure of productivity or profit. Its four singing blobs stand as symbols of a future where machines don’t overshadow human creativity but instead amplify moments of wonder. In that sense, Blob Opera is more than an experiment. It is an artifact of a transitional era, capturing a rare balance between technical sophistication and emotional resonance.

FAQs

1. How does Blob Opera produce realistic vocal sounds?

Blob Opera uses machine-learning models trained on professional opera singers. These models generate new sounds—not recordings—based on pitch, vowel shape, and harmonic patterns inferred from the training data.

2. Do users need musical training to use Blob Opera?

No. The interface is intentionally simple: dragging a blob up or down changes its pitch, and dragging it forward or backward changes the vowel sound. The model handles musical structure.

3. Is Blob Opera considered a serious AI research tool?

While primarily designed as an art experiment, its modeling techniques align with vocal synthesis research and are studied in academic contexts.

4. Can Blob Opera be used for teaching music?

Yes. Many educators integrate it into lessons on harmony, pitch, and resonance because it makes musical concepts intuitive and interactive.

5. Will there be future versions or updates?

Google has not announced formal updates, but the success of Blob Opera has influenced ongoing experiments in interactive AI-driven art and educational tools.

References

Delgado, S. (2022). Interactive Learning in Early Music Education. University of Melbourne Press.

Google Arts & Culture. (2020). Blob Opera: Behind the Experiment. Google.

Harrow, L. (2023). “Digital Play and Emotional Relief in Pandemic Media.” Cultural Technology Review, 7(2), 112–130.

Smith, L. (2022). “AI Vocal Modeling in Creative Tools.” Journal of Digital Art, 14(3), 45–58.

Tan, M. (2021). Resonance and the Human Voice. McGill University Press.

Google Trends Data. (2021). Blob Opera Global Search Patterns. Retrieved from https://trends.google.com