In the age of exponential data growth, where cloud computing, AI training, and real-time analytics dominate the enterprise tech landscape, conversations often orbit around software, compute power, and bandwidth. Rarely, however, do they dwell on the hardware mechanisms quietly enabling that speed and scale. Among the most critical—and least publicly understood—of these is the I/O drawer.

While it may sound like a minor technical detail, the I/O drawer is a key part of the architecture of many large servers, especially in data centers and high-performance computing (HPC) environments. It sits physically and metaphorically between the heart of a system (its processors and memory) and its nervous system (networks and storage). It houses the adapters through which servers send and receive vast volumes of data.

This article delves deep into the world of the I/O drawer—what it is, how it functions, why it matters, and how it’s evolving as enterprise computing demands more speed, modularity, and scalability.

What Is an I/O Drawer?

The term “I/O” stands for input/output. In computing, this refers to the channels through which a system receives (inputs) and transmits (outputs) data. Think of it as the communication layer that lets processors talk to external storage, network interfaces, and peripheral devices.

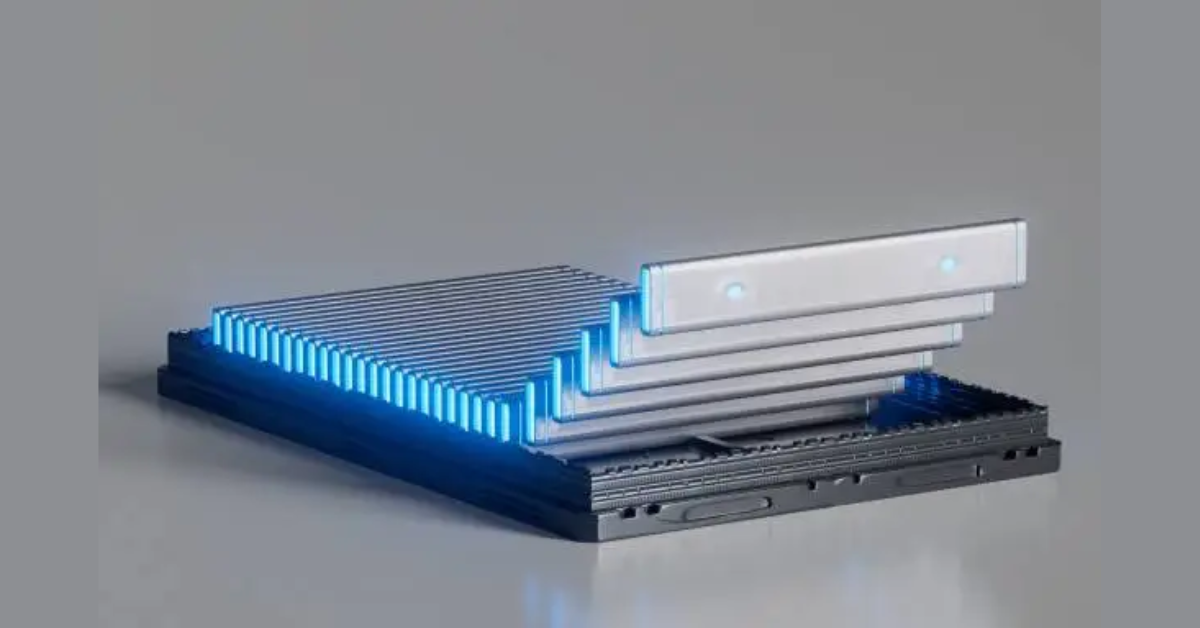

An I/O drawer is a dedicated physical enclosure or chassis that houses high-speed I/O components: network interface cards (NICs), storage adapters, switches, and sometimes specialty accelerators. These components are not built directly into the motherboard or compute node. Instead, they live in a modular unit that connects to the system over a high-bandwidth link. That link is most commonly PCIe (Peripheral Component Interconnect Express) extended over copper or optical cables, or a vendor-specific interconnect.

This architecture enables:

- Scalability: Additional I/O resources can be added without disrupting the compute core.

- Flexibility: Different I/O configurations can be tailored for specific workloads.

- Serviceability: Components can be upgraded or replaced independently of the main system.

Why It Matters: Performance, Throughput, and Control

The role of the I/O drawer becomes clearer when you consider the types of workloads that dominate modern IT:

- AI and ML model training

- Financial transaction processing

- Scientific simulations and rendering

- Cloud-native microservices across thousands of containers

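All these processes involve massive data flows that need to move quickly, reliably, and without bottlenecks. The I/O drawer helps meet this demand by hosting more of the adapters that handle high-volume data movement, such as NICs, storage controllers, and accelerators. These adapters transfer data to and from memory with little direct CPU involvement.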

In practical terms, this translates to:

- Low-latency paths between storage/network and compute, provided cabling and switching are well designed

- Higher aggregate throughput, since more adapters can move data in parallel

- Better thermal management, since I/O heat is segregated from compute nodes

- Increased reliability, by isolating I/O faults from critical CPU tasks

Architecture and Design: What’s Inside an I/O Drawer?

An I/O drawer is not a single-purpose box. Its internal architecture is engineered for modularity and high performance. A typical drawer might include:

- Multiple PCIe slots for high-speed add-in cards

- Backplanes designed for redundancy and power efficiency

- Cooling units that manage airflow around high-heat components

- Control boards to manage diagnostics and firmware

- Cables and connectors optimized for high signal integrity

Some I/O drawers also support hot-swappable components, allowing technicians to replace or upgrade cards without powering down the server—an essential feature in environments where uptime is paramount.

Use Cases Across Industries

I/O drawers are not just found in academic supercomputers. They are used across sectors:

- Finance: Real-time trading algorithms require extremely low-latency I/O with custom NICs.

- Healthcare: Genomic sequencing and medical imaging demand massive parallel storage throughput.

- Cloud service providers: Hyperscalers need customizable I/O layers for virtualization and container orchestration.

- Media & Entertainment: Rendering farms and post-production facilities rely on fast I/O to stream high-res assets.

The drawer architecture lets each of these industries optimize their systems without changing core processors, which is both cost-effective and operationally efficient.

I/O Drawer vs. Integrated I/O: The Case for Modularity

A common question is: Why not just integrate I/O into the motherboard or server chassis?

For small-scale or consumer-grade systems, this approach works. But in enterprise computing, modularization is essential. Here’s why:

- Upgradability: I/O standards evolve rapidly. A modular drawer lets you swap in newer adapters without replacing entire systems. The link back to the host, however, still caps what a newer standard such as PCIe 6.0 can deliver.

- Workload specificity: Compute nodes can remain generic, while I/O drawers are tailored.

- Thermal zones: Segregating I/O allows targeted cooling, extending component lifespan.

- Density optimization: Drawers allow more compute per rack unit by offloading I/O needs.
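The drawer's uplink to the host carries the traffic of every card behind it, so it helps to know what a given PCIe link can actually move. The sketch below is a minimal illustration. It assumes the published per-lane transfer rates and line encodings for PCIe 1.0 through 5.0: 8b/10b for the first two generations and 128b/130b afterwards. The function name `link_bandwidth_gbytes` is our own. The sketch ignores protocol overhead such as packet headers, so real transfers land below these figures.

```python
# Approximate per-direction PCIe bandwidth from line rate and line encoding.
# Covers PCIe 1.0-5.0 only; PCIe 6.0 moves to PAM4 signalling with
# FLIT-based encoding and FEC, which this simple model does not capture.
# link_bandwidth_gbytes is a hypothetical helper name.

# generation -> (transfer rate per lane in GT/s, line-encoding efficiency)
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def link_bandwidth_gbytes(gen: int, lanes: int) -> float:
    """Usable GB/s in one direction after line encoding.

    Packet headers, flow control and other protocol overhead are ignored,
    so real transfers land somewhat below this figure.
    """
    if gen not in PCIE_GENERATIONS:
        raise ValueError(f"unsupported PCIe generation: {gen}")
    if lanes < 1:
        raise ValueError(f"lane count must be positive, got {lanes}")
    rate_gt, efficiency = PCIE_GENERATIONS[gen]
    # each transfer moves one bit per lane; divide by 8 to get bytes
    return rate_gt * efficiency * lanes / 8


if __name__ == "__main__":
    for gen in sorted(PCIE_GENERATIONS):
        print(f"PCIe {gen}.0 x16: {link_bandwidth_gbytes(gen, 16):.2f} GB/s")
```

Run as a script, it prints 4.00, 8.00, 15.75, 31.51 and 63.02 GB/s for x16 links of generations 1 through 5. The line rate doubles at every step except 2.0 to 3.0. There, the rate rose only from 5 to 8 GT/s, but the switch to 128b/130b encoding still nearly doubled usable bandwidth.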

Innovation in I/O Drawer Technology

Modern I/O drawers are not static boxes. They are increasingly intelligent and software-defined. Some current innovations include:

- Programmable data planes, allowing real-time routing and filtering without CPU intervention

- SmartNICs (network interface cards with onboard processors) for encryption, compression, and telemetry

- FPGAs and ASICs embedded for workload-specific acceleration

- Optical I/O technologies, replacing copper to extend reach and cut power consumption at high data rates

Vendors are also exploring AI-enhanced thermal and power management within the drawer, using machine learning to predict and optimize performance.
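Programmable data planes, the first innovation listed above, are usually built around match-action tables. Each rule matches packet header fields and names an action, and the highest-priority matching rule decides what happens. Production data planes are written in languages such as P4 and run on SmartNICs or switch ASICs. The Python below only models the lookup logic and supports exact matches only; real tables also offer longest-prefix and ternary matching. The class names `Rule` and `MatchActionTable` are hypothetical.

```python
# Toy match-action table illustrating the lookup step of a programmable
# data plane. Rule and MatchActionTable are hypothetical names; real data
# planes compile such tables into NIC or switch hardware.

from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Rule:
    priority: int
    match: Dict[str, object]   # header field name -> exact value required
    action: str                # e.g. "forward:2" or "drop"


class MatchActionTable:
    def __init__(self, default_action: str = "drop") -> None:
        self.rules: List[Rule] = []
        self.default_action = default_action

    def add_rule(self, rule: Rule) -> None:
        self.rules.append(rule)
        # keep the highest priority first so lookup can stop at the first hit
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet: Dict[str, object]) -> str:
        """Return the action of the highest-priority rule whose fields all match."""
        for rule in self.rules:
            if all(packet.get(name) == value for name, value in rule.match.items()):
                return rule.action
        return self.default_action  # no rule matched
```

Priorities let a narrow rule, such as dropping traffic from one source address, override a broader forwarding rule that also matches the same packet.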

Power, Cooling, and Maintenance Considerations

An I/O drawer must handle high-speed data flow without becoming a thermal choke point. As a result, drawers often feature:

- Redundant power supplies

- Independent cooling zones

- Real-time diagnostics with onboard monitoring chips

In colocation or hyperscale data centers, I/O drawers are managed remotely, with firmware updates and component health reports accessible through management planes like Redfish or proprietary APIs.
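Redfish is the DMTF's REST-based management standard. A service root at `/redfish/v1/` links to collections such as `Chassis`, and chassis resources carry a `Status` object with `State` and `Health` properties. A minimal health sweep might look like the following. It assumes the drawer is modeled as a member of the Chassis collection; how vendors model I/O drawers varies. It also leaves out the authentication and certificate handling a real baseboard management controller requires. The helpers `http_fetcher` and `unhealthy_chassis` are hypothetical names.

```python
# Sweep a Redfish service's Chassis collection and report enabled members
# whose Status.Health is not "OK". Authentication and TLS certificate
# handling are omitted for brevity; a real BMC will require both.
# http_fetcher and unhealthy_chassis are hypothetical helper names.

import json
import urllib.request
from typing import Callable, List, Tuple

Fetch = Callable[[str], dict]


def http_fetcher(base_url: str, timeout: float = 10.0) -> Fetch:
    """Return a function that GETs a Redfish path and decodes the JSON body."""
    def fetch(path: str) -> dict:
        url = base_url.rstrip("/") + path
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return json.load(resp)
    return fetch


def unhealthy_chassis(fetch: Fetch) -> List[Tuple[str, str]]:
    """Return (name, health) for each enabled chassis not reporting "OK"."""
    collection = fetch("/redfish/v1/Chassis")
    problems: List[Tuple[str, str]] = []
    for member in collection.get("Members", []):
        path = member["@odata.id"]          # e.g. "/redfish/v1/Chassis/1"
        chassis = fetch(path)
        status = chassis.get("Status", {})
        # skip absent or disabled chassis; flag enabled ones that are not OK
        if status.get("State") == "Enabled" and status.get("Health") != "OK":
            problems.append((chassis.get("Name", path), str(status.get("Health"))))
    return problems
```

Passing the fetch function in as a parameter keeps the traversal logic testable against canned JSON. Against a live service, the call would be `unhealthy_chassis(http_fetcher("https://bmc.example"))`, where `bmc.example` is a placeholder host.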

This level of control ensures that maintenance is predictive, not reactive—a major advantage for 24/7 environments.
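Predictive maintenance can start with something as simple as extrapolating a trend. The sketch below fits a least-squares line to recent sensor samples, such as drawer inlet temperatures, and estimates how long until that line crosses a limit. It is a deliberately simple stand-in and assumes ascending timestamps; real management stacks use richer models. The function name `time_to_threshold` and the 70 °C limit in the example are hypothetical.

```python
# Estimate time until a rising sensor reading crosses a limit, using an
# ordinary least-squares line through recent samples. time_to_threshold is
# a hypothetical helper, not part of any vendor's management software.

from typing import Optional, Sequence


def time_to_threshold(times: Sequence[float], readings: Sequence[float],
                      threshold: float) -> Optional[float]:
    """Time remaining after the last sample until the fitted line reaches
    `threshold`; 0.0 if already past it, None if the trend is flat or falling.
    `times` must be in ascending order."""
    n = len(times)
    if n != len(readings) or n < 2:
        raise ValueError("need at least two (time, reading) pairs")
    mean_t = sum(times) / n
    mean_r = sum(readings) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("sample times must not all be equal")
    sxy = sum((t - mean_t) * (r - mean_r) for t, r in zip(times, readings))
    slope = sxy / sxx
    if slope <= 0:
        return None  # not trending toward the limit
    intercept = mean_r - slope * mean_t
    crossing = (threshold - intercept) / slope  # time at which line hits limit
    return max(0.0, crossing - times[-1])
```

Take hourly readings of 50, 52, 54 and 56 °C: `time_to_threshold([0, 1, 2, 3], [50, 52, 54, 56], 70)` returns 7.0. The fitted slope is 2 °C per hour, so the limit is about seven hours away if the trend holds.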

The Role in Disaggregated and Composable Infrastructure

As IT infrastructure moves toward composable architectures, where compute, storage, and network resources are pooled and assigned dynamically, the I/O drawer plays a foundational role.

In this model, the I/O drawer serves as a connectivity orchestrator, enabling:

- Dynamic attachment of network/storage resources to virtual machines

- Zero-downtime scaling of workloads

- More efficient use of rack space and cabling

Composable infrastructure frameworks (like HPE Synergy or Intel’s Rack Scale Design) rely on such modular I/O systems to abstract hardware from workload requirements.
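The mechanics of "dynamic attachment" can be sketched as a shared pool. Devices are bound to a host on demand and released back for reuse. The classes `IOPool` and `IODevice` are hypothetical and much simpler than the interfaces of real composable platforms; they are not the API of either framework named above. In a real system, attaching a device would typically also involve reconfiguring the PCIe switch or fabric so the device appears to the chosen host.

```python
# Toy model of composable I/O: devices live in a shared pool and are
# attached to hosts on demand. IOPool and IODevice are hypothetical names;
# fabric reconfiguration is deliberately left out.

from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class IODevice:
    device_id: str
    kind: str                        # e.g. "nic", "nvme", "gpu"
    attached_to: Optional[str] = None


@dataclass
class IOPool:
    devices: Dict[str, IODevice] = field(default_factory=dict)

    def add(self, device_id: str, kind: str) -> None:
        self.devices[device_id] = IODevice(device_id, kind)

    def attach(self, kind: str, host: str) -> str:
        """Bind the first free device of `kind` to `host` and return its id."""
        for dev in self.devices.values():
            if dev.kind == kind and dev.attached_to is None:
                dev.attached_to = host
                return dev.device_id
        raise LookupError(f"no free {kind} device in pool")

    def detach(self, device_id: str) -> None:
        """Return a device to the pool so another host can use it."""
        self.devices[device_id].attached_to = None

    def attached(self, host: str) -> List[str]:
        return [d.device_id for d in self.devices.values() if d.attached_to == host]
```

Keeping ownership in one place makes it easy to see which host holds which device and to hand an idle adapter to a new workload without recabling.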

Challenges and Risks

Despite their advantages, I/O drawers introduce complexity:

- Cost: They require additional power, cooling, and hardware investment.

- Interoperability: Not all add-in cards play well with all drawer backplanes.

- Signal-integrity risks: Poor cable or signal routing can cause retransmissions or force links to train at lower speeds, nullifying performance gains.

- Vendor lock-in: Some systems are proprietary, limiting user control over upgrades.
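Interoperability and cabling problems often surface as a link that trains below what the hardware should manage. A PCIe link settles on the highest speed and width that both ends, and the channel between them, can sustain. A marginal cable or an older slot therefore shows up as a lower speed or fewer lanes. On Linux, PCI devices expose `current_link_speed`, `max_link_speed`, `current_link_width` and `max_link_width` in sysfs, and the sketch below compares them. The helper name `downtrained_links` is hypothetical. A mismatch is a prompt to investigate rather than proof of a fault: a PCIe 4.0 card in a PCIe 3.0 slot will legitimately run at 8 GT/s.

```python
# Flag PCI devices whose link trained below the device's advertised maximum,
# using the Linux sysfs attributes current_link_speed, max_link_speed,
# current_link_width and max_link_width. downtrained_links is hypothetical.

from pathlib import Path
from typing import List, Optional, Tuple

SYSFS_PCI = Path("/sys/bus/pci/devices")


def _read_attr(dev: Path, name: str) -> Optional[str]:
    """Return a stripped sysfs attribute, or None if absent or unreadable."""
    try:
        return (dev / name).read_text().strip()
    except OSError:
        return None


def _speed_gt(text: str) -> Optional[float]:
    """Parse '8.0 GT/s PCIe' or '8 GT/s' into 8.0; None for 'Unknown' etc."""
    try:
        return float(text.split()[0])
    except (ValueError, IndexError):
        return None


def downtrained_links(root: Path = SYSFS_PCI) -> List[Tuple[str, str]]:
    """Return (device address, description) pairs for links below maximum."""
    findings: List[Tuple[str, str]] = []
    if not root.is_dir():
        return findings  # not Linux, or sysfs not mounted
    for dev in sorted(root.iterdir()):
        cur_s = _read_attr(dev, "current_link_speed")
        max_s = _read_attr(dev, "max_link_speed")
        cur_w = _read_attr(dev, "current_link_width")
        max_w = _read_attr(dev, "max_link_width")
        if None in (cur_s, max_s, cur_w, max_w):
            continue  # not every PCI function exposes link attributes
        cs, ms = _speed_gt(cur_s), _speed_gt(max_s)
        if cs is not None and ms is not None and cs < ms:
            findings.append((dev.name, f"speed {cur_s} < {max_s}"))
        if cur_w.isdigit() and max_w.isdigit() and int(cur_w) < int(max_w):
            findings.append((dev.name, f"width x{cur_w} < x{max_w}"))
    return findings


if __name__ == "__main__":
    for address, issue in downtrained_links():
        print(address, issue)
```

Taking the sysfs root as a parameter lets the same check run against a copied or synthetic directory tree, which is handy for testing or for auditing snapshots collected from remote hosts.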

Organizations must evaluate I/O drawer use against workload requirements and budget priorities. In smaller environments, the benefit may not outweigh the complexity.

Future Outlook: Where I/O Drawers Are Headed

Looking ahead, I/O drawers are set to become smarter, denser, and more integrated with AI operations. Trends include:

- PCIe 6.0 and 7.0 compatibility

- Support for quantum interconnects in research settings

- Integration with liquid-cooled systems

- Unified management with orchestration platforms like Kubernetes

We will likely see software-defined I/O drawers, where FPGAs and smart switches reconfigure interfaces dynamically based on traffic, creating virtual interconnects in real time.

Conclusion: Infrastructure’s Quiet Workhorse

The I/O drawer is the invisible muscle behind high-throughput computing. It doesn’t get headlines or hashtags, but it enables the services, insights, and applications that define our digital age.

As demands for speed, security, and customization intensify, the humble I/O drawer will continue to evolve—quietly empowering the infrastructures that shape modern life.

FAQs

1. What is an I/O drawer in computing systems?

An I/O drawer is a modular hardware component that houses input/output resources like network cards, storage adapters, and interface modules. It connects to a server or compute node and manages high-speed data communication between the system and external devices or networks.

2. Why are I/O drawers used instead of integrating I/O directly into servers?

I/O drawers offer modularity, flexibility, and scalability. They allow system architects to upgrade or customize I/O independently from compute components, optimize thermal performance, and accommodate evolving connectivity standards such as new PCIe generations.

3. What types of environments typically use I/O drawers?

I/O drawers are common in data centers, high-performance computing (HPC), financial services, media rendering, and scientific research—anywhere that high-throughput, low-latency data exchange is critical.

4. How do I/O drawers contribute to system performance?

By moving adapters out of the main server chassis, I/O drawers let a system host more I/O devices, raise aggregate data throughput, and isolate potential points of failure. They also allow airflow, power, and thermal loads to be managed separately from the compute nodes, which is vital in enterprise environments.

5. Are I/O drawers compatible across different server platforms?

Compatibility depends on the interface protocols and vendor design. Some I/O drawers follow open standards like PCIe, while others use proprietary connections, which may limit cross-platform use. Always verify compatibility with your hardware ecosystem.